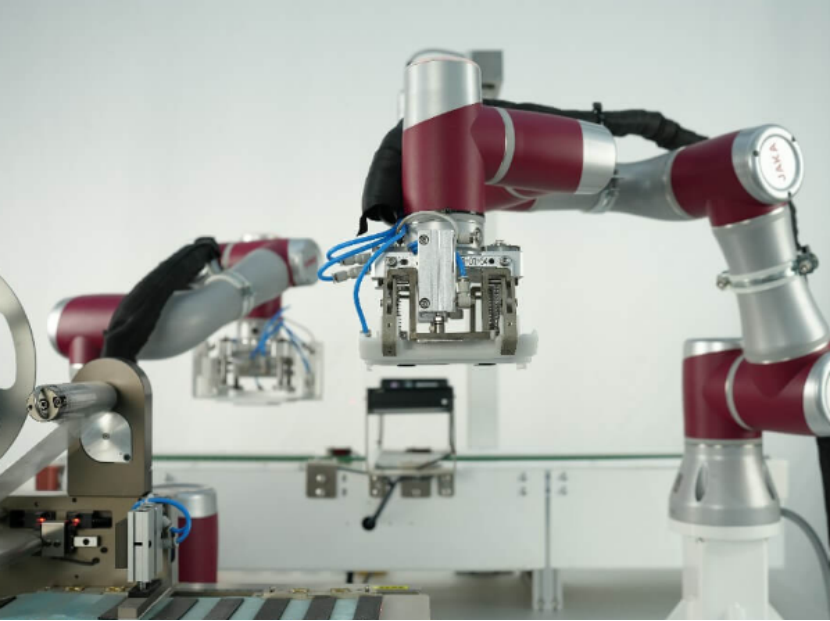

Effective integration of a vision system can redefine the capabilities of a collaborative robot. The process goes beyond adding hardware: it encompasses system design, programming philosophy, and ongoing management. We at JAKA propose seven specific, actionable strategies to improve the operational efficiency of your vision-guided cobot workcell.

Tip 1: Begin with a Comprehensive Process Audit

Before selecting hardware, conduct a detailed analysis of the task. Define the part variations, required recognition tolerances, and environmental conditions. This audit determines the necessary field of view, camera resolution, and, most critically, the lighting solution, establishing a factual foundation for all subsequent steps.
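To make the audit's output concrete, the field of view and the smallest feature to be resolved can be turned into a minimum camera resolution. The sketch below assumes a common rule of thumb that the smallest feature should span about three pixels. That factor and the function names are illustrative assumptions, not a JAKA specification, and a real selection should also account for lens distortion and the vision tool's sub-pixel capability.

```python
import math
from typing import Tuple

def min_pixels(fov_mm: float, smallest_feature_mm: float, pixels_per_feature: float = 3.0) -> int:
    """Pixels needed along one axis so the smallest feature spans
    `pixels_per_feature` pixels (a rule-of-thumb factor, not a vendor spec)."""
    if min(fov_mm, smallest_feature_mm, pixels_per_feature) <= 0:
        raise ValueError("all inputs must be positive")
    return math.ceil(fov_mm * pixels_per_feature / smallest_feature_mm)

def min_resolution(fov_w_mm: float, fov_h_mm: float, smallest_feature_mm: float,
                   pixels_per_feature: float = 3.0) -> Tuple[int, int]:
    """Minimum (width, height) sensor resolution for the audited field of view."""
    return (min_pixels(fov_w_mm, smallest_feature_mm, pixels_per_feature),
            min_pixels(fov_h_mm, smallest_feature_mm, pixels_per_feature))

# Example: a 200 x 150 mm field of view with 0.5 mm features
print(min_resolution(200, 150, 0.5))  # (1200, 900)
```

In practice one would choose a sensor with some margin above this minimum, since parts rarely sit perfectly centered in the field of view.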

Tip 2: Prioritize Sub-Millimeter System Calibration

The spatial relationship between the camera, the robot, and the world coordinate frame must be defined with high precision. Invest time in a meticulous calibration procedure using certified calibration artifacts, such as precision calibration plates. Accurate calibration limits error propagation between coordinate frames, ensuring that vision-derived coordinates reliably guide the robot’s end-effector to the intended target position.
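As an illustration, consider the simplest case: a fixed camera looking down on a flat work surface, where camera points have already been converted to millimeters and the robot's tool center point has been jogged to several calibration marks. A closed-form least-squares fit then gives the rotation and translation from the camera plane to the robot base, and the RMS residual shows whether the sub-millimeter target was met. The planar setup and all names here are assumptions for the sketch.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in millimeters

def cam_to_robot(theta: float, tx: float, ty: float, p: Point) -> Point:
    """Map a camera-plane point (mm) into robot base XY (mm)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def fit_rigid_2d(cam: List[Point], robot: List[Point]) -> Tuple[float, float, float]:
    """Closed-form least-squares rotation (rad) and translation (mm)
    that best map the camera points onto the matching robot points."""
    if len(cam) != len(robot) or len(cam) < 2:
        raise ValueError("need at least two matching point pairs")
    n = len(cam)
    cax, cay = sum(p[0] for p in cam) / n, sum(p[1] for p in cam) / n
    crx, cry = sum(p[0] for p in robot) / n, sum(p[1] for p in robot) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(cam, robot):
        ax, ay, bx, by = ax - cax, ay - cay, bx - crx, by - cry  # center both sets
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated camera centroid onto the robot centroid
    return theta, crx - (c * cax - s * cay), cry - (s * cax + c * cay)

def rms_error_mm(theta: float, tx: float, ty: float,
                 cam: List[Point], robot: List[Point]) -> float:
    """Root-mean-square residual of the fitted transform over all point pairs."""
    total = 0.0
    for p, (rx, ry) in zip(cam, robot):
        qx, qy = cam_to_robot(theta, tx, ty, p)
        total += (qx - rx) ** 2 + (qy - ry) ** 2
    return math.sqrt(total / len(cam))
```

If the RMS residual, ideally checked on marks not used in the fit, exceeds the sub-millimeter budget, the calibration should be repeated before production. Eye-in-hand or full 3D setups need a 6-DoF hand-eye solver instead, for example OpenCV's `calibrateHandEye`.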

Tip 3: Engineer Robust and Consistent Lighting

Treat lighting as a core system component, not an accessory. Design illumination that eliminates shadows, reduces glare, and maximizes feature contrast for the vision algorithm. Consistent lighting is a prerequisite for stable, reliable image acquisition, which directly determines the cobot’s positioning consistency over long production runs.
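Lighting quality can be monitored with simple image statistics. The sketch below computes the Michelson contrast between a feature region and its background, plus the share of clipped pixels as a rough glare indicator. It assumes 8-bit grayscale values already extracted from those regions, and the 0.5 contrast and 1% saturation thresholds are hypothetical values to be tuned per application.

```python
from statistics import mean
from typing import Sequence

def michelson_contrast(feature_px: Sequence[int], background_px: Sequence[int]) -> float:
    """(Imax - Imin) / (Imax + Imin) of the mean feature and background gray levels."""
    f, b = mean(feature_px), mean(background_px)
    hi, lo = max(f, b), min(f, b)
    return 0.0 if hi + lo == 0 else (hi - lo) / (hi + lo)

def saturated_fraction(pixels: Sequence[int], limit: int = 255) -> float:
    """Share of pixels at or above `limit` (8-bit clipping), a simple glare indicator."""
    return sum(1 for p in pixels if p >= limit) / len(pixels)

def lighting_ok(feature_px: Sequence[int], background_px: Sequence[int], all_px: Sequence[int],
                min_contrast: float = 0.5, max_saturated: float = 0.01) -> bool:
    """Pass only if contrast is high enough and glare is low enough (thresholds are assumptions)."""
    return (michelson_contrast(feature_px, background_px) >= min_contrast
            and saturated_fraction(all_px) <= max_saturated)
```

Logging these values for every frame makes slow trends visible, such as contrast falling over a shift as ambient light changes.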

Tip 4: Implement Conditional Logic Based on Vision Data

Program the robot to make operational decisions using camera input. This logic can include part identification, adaptive grip point selection, or pass/fail routing. By embedding this intelligence, the collaborative robot cell gains flexibility, automatically adapting to variations without manual reprogramming.
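A minimal sketch of such decision logic is shown below as a controller-side function that consumes one vision result and returns an action plus a grip point. The `VisionResult` fields, the part names, the program names in `PLACE_PROGRAMS`, and the 0.8 match-score threshold are all hypothetical; an actual vision tool reports results in its own format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

@dataclass
class GripCandidate:
    x_mm: float
    y_mm: float
    angle_deg: float
    clearance_mm: float  # free space around the grip point, as reported by vision

@dataclass
class VisionResult:
    part_id: Optional[str]  # None when no part type was recognized
    match_score: float      # 0..1 recognition confidence
    passed_inspection: bool
    grips: List[GripCandidate] = field(default_factory=list)

# Hypothetical mapping from recognized part type to a robot place program
PLACE_PROGRAMS: Dict[str, str] = {"bracket_A": "place_tray_1", "bracket_B": "place_tray_2"}

def decide(result: VisionResult, min_score: float = 0.8) -> Tuple[str, Optional[GripCandidate]]:
    """Return (action, grip) for the robot controller."""
    # Part identification: an uncertain match is never acted on
    if result.part_id is None or result.match_score < min_score:
        return "rescan", None
    # Pass/fail routing, then per-part routing for good parts
    if not result.passed_inspection:
        action = "place_reject_bin"
    else:
        action = PLACE_PROGRAMS.get(result.part_id, "place_unknown_hold")
    # Adaptive grip selection: prefer the candidate with the most clearance
    grip = max(result.grips, key=lambda g: g.clearance_mm, default=None)
    if grip is None:
        return "rescan", None
    return action, grip
```

Keeping the decision in one pure function makes it easy to test offline against recorded vision results before deploying it to the cell.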

Tip 5: Develop Autonomous Error Recovery Routines

Anticipate common failure modes such as part misalignment or missed picks. Program the system to execute a predefined recovery sequence (for example, a re-scan, a gentle nudge, or a safe retry) without operator intervention. This capability significantly reduces unplanned downtime and maintains workflow continuity.
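The recovery behavior can be expressed as a bounded escalation loop: each failed pick triggers the next recovery step, the pick is retried, and the cell stops for an operator only after every step is exhausted. `attempt_pick` and the recovery callables are hypothetical hooks into the robot program. Bounding the sequence is a deliberate choice so the cell never retries indefinitely.

```python
from enum import Enum
from typing import Callable, Sequence

class Outcome(Enum):
    SUCCESS = "success"
    NEEDS_OPERATOR = "needs_operator"

def pick_with_recovery(
    attempt_pick: Callable[[], bool],
    recovery_steps: Sequence[Callable[[], None]],
    log: Callable[[str], None] = print,
) -> Outcome:
    """Try a pick; on failure run each recovery step once, retrying the pick
    after each, and escalate to the operator only when all steps are used up."""
    if attempt_pick():
        return Outcome.SUCCESS
    for step in recovery_steps:
        name = getattr(step, "__name__", repr(step))
        log(f"pick failed, running recovery step: {name}")
        step()  # e.g. re-scan, nudge the part, back off and re-approach
        if attempt_pick():
            return Outcome.SUCCESS
    log("recovery exhausted, stopping safely for operator")
    return Outcome.NEEDS_OPERATOR
```

Ordering the steps from least to most disruptive (re-scan before nudge, for instance) keeps the typical recovery as short as possible.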

Tip 6: Leverage the Robot’s Mobility for Enhanced Perception

Use the cobot’s dexterity to improve vision outcomes. Strategies include mounting the camera on the end-effector (an eye-in-hand configuration) to inspect confined spaces, or programming the robot to present a complex part to a fixed camera at an optimal angle. This active positioning increases the vision system’s first-look success rate.
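For the fixed-camera case, one way to exploit the robot's mobility is to present the part at several candidate orientations and keep the first view the vision tool accepts. In the sketch below, `present_at` and `detection_score` are hypothetical stand-ins for a robot move and a vision call, and the candidate angles and 0.9 acceptance threshold are assumptions.

```python
from typing import Callable, Iterable, Optional, Tuple

def find_best_view(
    angles_deg: Iterable[float],
    present_at: Callable[[float], None],
    detection_score: Callable[[], float],
    accept: float = 0.9,
) -> Tuple[Optional[float], float]:
    """Present the part at each candidate angle to a fixed camera; stop at the
    first view whose score meets `accept`, otherwise return the best view seen.
    Returns (None, -inf) if no angles are given."""
    best_angle, best_score = None, float("-inf")
    for angle in angles_deg:
        present_at(angle)          # robot rotates the held part (hypothetical call)
        score = detection_score()  # vision confidence, 0..1 (hypothetical call)
        if score > best_score:
            best_angle, best_score = angle, score
        if score >= accept:
            break  # early exit keeps cycle time short
    return best_angle, best_score
```

Stopping at the first acceptable view trades a possibly better later view for a shorter cycle; listing the historically best angle first makes the first look succeed most often.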

Tip 7: Establish a Schedule for Periodic System Re-validation

Performance can drift due to environmental changes or component wear. Implement a routine to periodically re-check calibration accuracy and re-tune vision parameters against a master sample. This proactive maintenance sustains the system’s designed accuracy and efficiency over its entire operational lifecycle.
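A re-validation routine can be as simple as locating a master sample at a fixed interval and comparing its position with the reference recorded at commissioning. The weekly interval, the 0.2 mm and 0.5 mm thresholds, and the mapping from drift level to action are illustrative assumptions, to be set from the application's own tolerance budget.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Tuple

@dataclass
class RevalidationPolicy:
    interval: timedelta = timedelta(days=7)  # hypothetical check schedule
    warn_mm: float = 0.2                     # drift that triggers vision re-tuning
    fail_mm: float = 0.5                     # drift that triggers full recalibration

def is_due(last_check: datetime, now: datetime, policy: RevalidationPolicy) -> bool:
    """True once the configured interval has elapsed since the last check."""
    return now - last_check >= policy.interval

def check_master_sample(reference_xy: Tuple[float, float], measured_xy: Tuple[float, float],
                        policy: RevalidationPolicy) -> str:
    """Classify drift between the commissioned and currently located master position (mm)."""
    drift = math.hypot(measured_xy[0] - reference_xy[0], measured_xy[1] - reference_xy[1])
    if drift >= policy.fail_mm:
        return "recalibrate"
    if drift >= policy.warn_mm:
        return "retune"
    return "ok"
```

Recording each drift value, not just the verdict, lets the team see wear-related trends before they cross the failure threshold.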

Optimizing a vision-integrated cobot is a systematic endeavor that benefits from a structured approach. These seven tips provide a framework that spans initial design, intelligent programming, and sustained maintenance. Our focus at JAKA on high-precision control and ease of system integration supports the practical implementation of these strategies, aiming to deliver resilient and perceptive automation solutions.